Systems Analysis

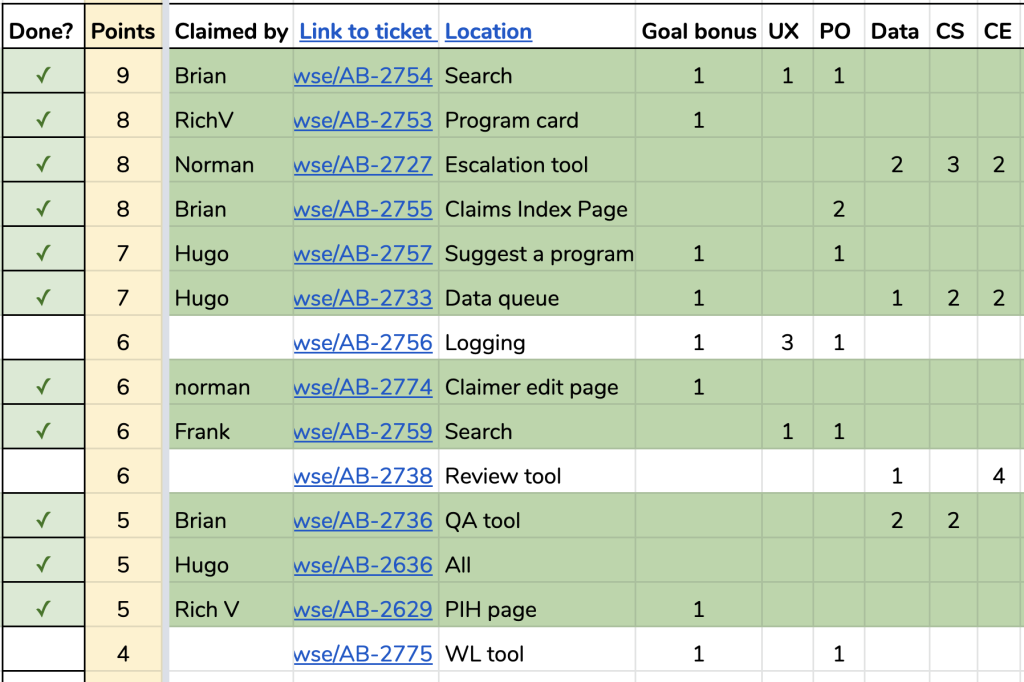

I designed a new system that aligned incentives to knock out the unglamorous 15-minute tasks that weren’t big enough to fight for on their own but together delivered massive value per minute of work. The result was Dev Race: a quarterly competition in which developers raced to rack up the most impact points, for prizes, during a two-hour hackathon-style lunch over tacos. The highest value-per-minute tickets consistently got done first, and every team, along with our users, reaped the rewards.

I built Dev Race to address a complex prioritization problem at our company. For years, countless small changes with the highest impact went under-resourced, because teams had incentives to propose big projects instead. A few developer-days of small tickets would have generated more value than any four-week project a team advocated for. But that value was spread across many teams, and each team was trying to squeeze more dev hours out of its one quarterly project and win more buy-in from leadership by projecting big-project-sized returns.

As I worked to understand the existing system, I discovered two major disconnects that stood in the way of making the case for these tickets.

First, knowledge about how long each task would really take was distributed across the dev team, so the person estimating a ticket could be missing a key piece of information. Before Dev Race, my team tried to advocate for these tickets by bundling several into one project. But bundling increased risk and estimate variance. One or two of the small tickets always turned into a boondoggle that required refactoring some deep legacy code, which made the whole bundle take much longer than anticipated and significantly lowered its return on time.

Second, knowledge about the value of small tasks was distributed across the stakeholders. According to the teams, everything was important, so nothing stood out as most important. Leadership wasn’t excited about these unglamorous incremental improvements because their returns weren’t as obvious as a big project’s. And tickets that affected multiple teams suffered from a tragedy of the commons: no team wanted to spend its limited capital advocating for a ticket when only half of the benefit would go to it.

Our Head of Product constantly worked to understand both sides of this equation, but it was still a game of telephone, and information was lost along the way. The system needed an overhaul.

Mechanism Design

Mechanism design uses game theory to build systems that draw on distributed knowledge, setting the rules and incentives so that individuals (or teams) who would otherwise compete for a limited resource end up cooperating instead.

For Dev Race, every team could request as many tickets as it wanted, as long as each was estimated to take 15 minutes or less. Then a few representatives from each team each assigned 30 impact points across the tickets submitted by all teams. During a two-hour lunch hackathon, developers competed to win those impact points by completing tickets. Instead of dreading these tasks as unglamorous chores, everyone was excited to compete, and teams felt like their voices were being heard!

Each team had limited points and had to do the tough work of weighing each ask against all its other small-ticket priorities. Before this exercise, we kept hearing that everything was important! Through it, we discovered that some tickets were worth 10 or 20 times more than others to these teams. Tickets that offered a small amount of value to each of several teams added up across those teams and reached the top of the list for the first time.
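To make the point mechanics above concrete, here is a minimal Python sketch. It is illustrative only: the function names, ticket IDs, and representative names are hypothetical, and letting a representative spend up to (rather than exactly) 30 points is an assumption. It shows how a ticket that gets a few points from every team can outrank each team's individual favorite once the allocations are summed.

```python
from collections import defaultdict

# Rules as described in the text; all names here are hypothetical.
POINT_BUDGET = 30          # impact points each representative may assign
MAX_ESTIMATE_MINUTES = 15  # only tickets estimated at 15 minutes or less enter the race


def eligible_tickets(estimates: dict[str, int]) -> list[str]:
    """Return the IDs of submitted tickets small enough to enter the race."""
    return [t for t, minutes in estimates.items() if minutes <= MAX_ESTIMATE_MINUTES]


def tally_points(allocations: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum every representative's allocation into one total per ticket.

    `allocations` maps a representative to {ticket_id: points}.
    Assumption: a representative may spend up to, but not over, the budget.
    """
    totals: dict[str, int] = defaultdict(int)
    for rep, spend in allocations.items():
        if any(points < 0 for points in spend.values()):
            raise ValueError(f"{rep} assigned negative points")
        spent = sum(spend.values())
        if spent > POINT_BUDGET:
            raise ValueError(f"{rep} assigned {spent} points; the budget is {POINT_BUDGET}")
        for ticket, points in spend.items():
            totals[ticket] += points
    return dict(totals)


def rank_tickets(totals: dict[str, int]) -> list[str]:
    """Order tickets from most to fewest points; ties keep insertion order."""
    return sorted(totals, key=lambda ticket: totals[ticket], reverse=True)


# Each team's favorite ticket gets 22 points, but T2 gets 8 from everyone.
allocations = {
    "support_rep": {"T1": 22, "T2": 8},
    "sales_rep": {"T3": 22, "T2": 8},
    "billing_rep": {"T4": 22, "T2": 8},
}
print(eligible_tickets({"T1": 10, "T2": 15, "T9": 45}))  # ['T1', 'T2']
print(rank_tickets(tally_points(allocations)))          # ['T2', 'T1', 'T3', 'T4']
```

Summing per ticket, rather than letting each team rank only its own asks, is what lets the cross-team ticket (T2, 24 points) surface above each single-team favorite (22 points).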

Developers picked which tickets they worked on and could throw a ticket back into the pool if they discovered it was slowing them down too much. Before, we found out that a ticket had become a boondoggle only after hours of work had been sunk into it. Some of the high-value tickets turned out to be five-minute tasks, and the boondoggles didn’t slow anyone down. The 20% of tickets left unfinished turned out to be the ones that would have taken 80% of the time.
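The pick-and-throw-back rule described above can also be sketched in Python. This is a hypothetical model, not a description of how Dev Race was actually run: the `DevRace` class, the one-ticket-at-a-time limit, and awarding a ticket's full point total to whoever finishes it are all assumptions chosen to show why dropping a boondoggle costs the pool nothing.

```python
class DevRace:
    """Hypothetical model of one race: developers claim, drop, or finish tickets."""

    def __init__(self, ticket_points: dict[str, int]) -> None:
        self.pool = dict(ticket_points)                     # open ticket -> points on offer
        self.in_progress: dict[str, tuple[str, int]] = {}   # developer -> (ticket, points)
        self.scores: dict[str, int] = {}                    # developer -> points won so far

    def claim(self, dev: str, ticket: str) -> None:
        """Developer picks any open ticket; one at a time is an assumption."""
        if dev in self.in_progress:
            raise ValueError(f"{dev} is already working on {self.in_progress[dev][0]}")
        if ticket not in self.pool:
            raise ValueError(f"{ticket} is not in the pool")
        self.in_progress[dev] = (ticket, self.pool.pop(ticket))

    def throw_back(self, dev: str) -> None:
        """Return a ticket that turned out slow; its points go back with it."""
        ticket, points = self.in_progress.pop(dev)
        self.pool[ticket] = points

    def complete(self, dev: str) -> None:
        """Award the ticket's impact points to the developer who finished it."""
        _ticket, points = self.in_progress.pop(dev)
        self.scores[dev] = self.scores.get(dev, 0) + points

    def leaderboard(self) -> list[tuple[str, int]]:
        """Developers sorted from most to fewest points."""
        return sorted(self.scores.items(), key=lambda item: item[1], reverse=True)


race = DevRace({"T1": 22, "T2": 24, "T3": 22})
race.claim("dana", "T2")
race.complete("dana")
race.claim("eli", "T1")
race.throw_back("eli")      # T1 hit deep legacy code, so it goes back untouched
race.claim("eli", "T3")
race.complete("eli")
print(race.leaderboard())   # [('dana', 24), ('eli', 22)]
print(sorted(race.pool))    # ['T1']
```

In this model a dropped ticket keeps its points and stays available, so the only cost of abandoning it is the few minutes already spent, and the slow tickets naturally end up as the ones left over.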

The point of the prizes was to align developers’ incentives with delivering maximum value to the teams, not to create a cutthroat atmosphere. The prizes were just tokens worth less than $10 each, and everyone who competed got a prize of some sort. The atmosphere was friendly, with lots of knowledge sharing and collaboration, and we encouraged support engineers, designers, QA staff, and anyone else interested to compete.